Over the past few years, it seems like the rate at which new CLI tools are being written has picked up again, after relatively little activity between ~1995 and ~2015. I’d like to talk about a trend I’ve noticed, where people are rewriting and rethinking staples of the command line interface, why I think this trend might be happening, and why I think it is a good thing.

History

The terminal and the command line interface have been staples of computer user interfaces since before computer monitors were even available, with some of the first computers offering an interactive mode in the late 1950s.

The recognizable Linux terminal traces its lineage to the very first version of Unix in 1971. Many utilities that a Linux user interacts with every day, such as rm, cat, cd, cp, man, and a host of other core commands, trace their initial versions to this first version of Unix. Other tools are a bit newer, such as sed (1974), diff (1974), bc (1975), make (1976), or vi (1976). There were a few more tools introduced in the ’90s, such as vim (1991) and ssh (1995), but you get the picture.

The majority of the foundational CLI tools on a Linux PC, even one installed yesterday, are older than Linux itself.

Ok, so?

Now, there’s nothing wrong with this. The tools still work fine, but in the half-century since they were first written, terminals and the broader Linux ecosystem have all changed. Terminals can now display more colours, Unicode symbols, and even inline images. Terminal programs now coexist with graphical user interfaces, and only a small subset of computer users even know they exist, whereas in the past, terminals were the only way one interacted with the computer.

These changes to the environment surrounding CLI apps in recent years have led to a resurgence in development of command line utilities. Instead of just developing completely new tools or cloning old tools, I’ve noticed that people are rethinking and reinventing tools that have existed since the early days of Unix.

This isn’t just some compulsive need to rewrite every tool out there in your favorite language. People are looking at the problem these tools set out to solve, and coming up with their own solutions to them, exploring the space of possible solutions and taking new approaches.

It’s this exploration of the solution space that I’d like to take a look at: the ways that tools are changing, why people are changing them, and what kicked off this phenomenon.

The lessons learned from the past

A large amount of the innovation in the area, I think, can be attributed to lessons that have been learned in 50 years of using software: sharp edges we have repeatedly cut ourselves on, unintuitive interfaces that repeatedly trip us up, and growing frustration at the limitations that maintaining decades of backwards compatibility imposes on our tools.

These lessons have been gathering in the collective consciousness through cheatsheets, guides, and FAQs: resources to guide us through esoteric error messages, complex configurations, and dozens upon dozens of flags.

I’d like to go over a couple of the more prominent lessons that I feel terminal tools have learned in the past several decades.

A good out of the box experience

While configurability is great, one should not need to learn a new configuration language and dozens or hundreds of options to get a usable piece of software. Configuration should be for customization, not setup.

One of the earliest examples of this principle may be the fish shell. Both zsh and fish have powerful prompt and autocompletion engines, but zsh requires you to set up a custom prompt and enable completions in order to use the features that set it apart from the competition. With no config file, zsh is no better than bash. When starting fish for the first time, however, its powerful autocompletion and information-rich prompt are front and center with no configuration required. Of course, fish still has the same level of configurability as zsh; it just also has sensible defaults.

To demonstrate my point, this is the default prompt for zsh with no configuration. It only shows the hostname, with none of the advanced features you can get out of a zsh prompt even without plugins.

Here is bash’s prompt.

It actually gives more info than zsh’s, even though zsh can do more when properly configured.

And here is fish’s default prompt.

It has a few colours, shows everything the bash prompt does, and additionally shows the git branch we are on.

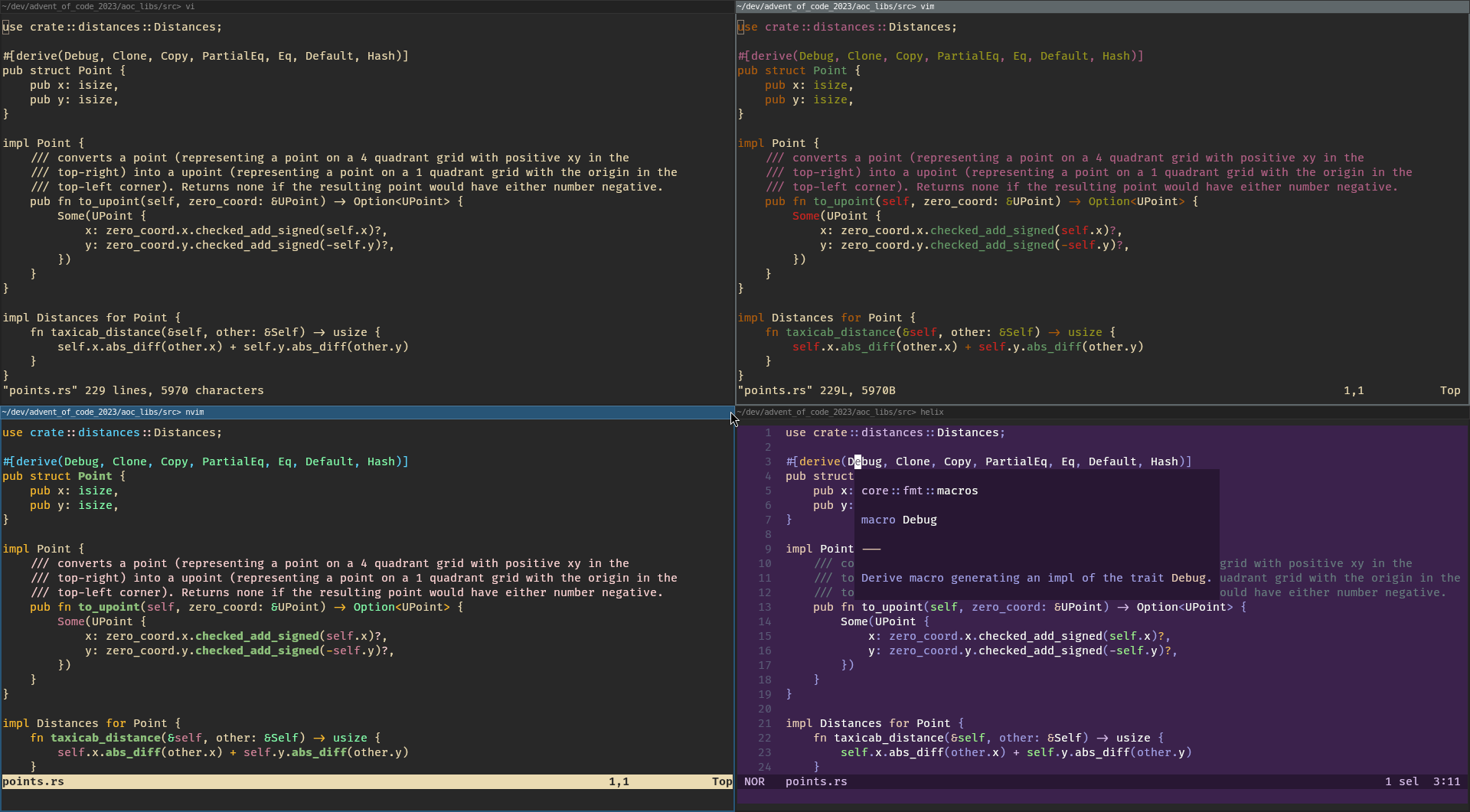

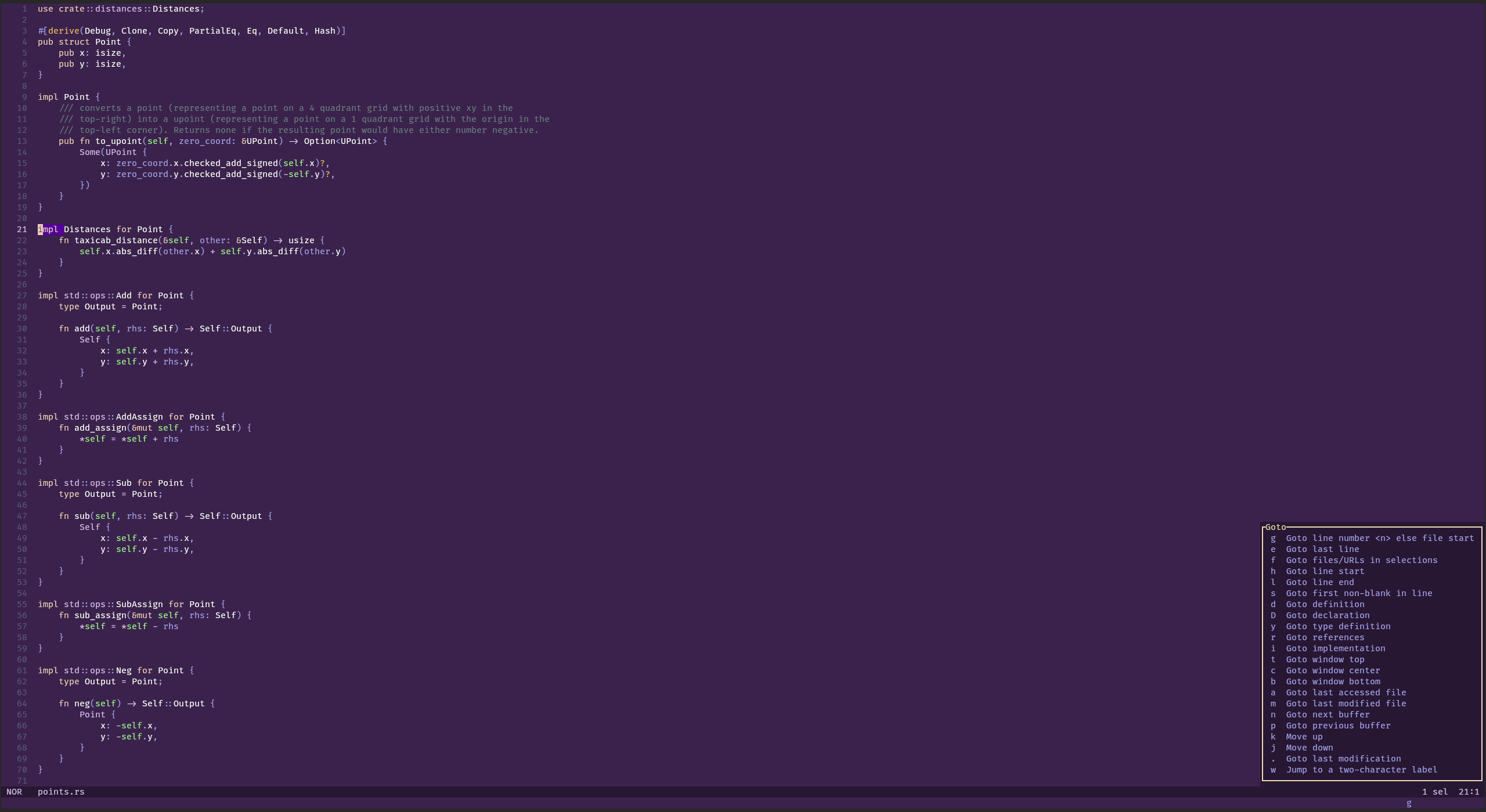
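To give a sense of what “configuration for setup” looks like in practice, here is a rough sketch of getting a fish-like prompt (user, host, working directory, and current git branch) out of bash by hand. The `git_branch` helper name and the colour choices are my own assumptions for illustration; neither bash nor fish ships this code.

```bash
# ~/.bashrc fragment (sketch): approximate fish's default prompt in bash.

# git_branch: hypothetical helper that prints " (branch)" inside a git
# repository and nothing otherwise (including when git is not installed
# or HEAD is detached).
git_branch() {
    local branch
    branch=$(git symbolic-ref --quiet --short HEAD 2>/dev/null) || return 0
    printf ' (%s)' "$branch"
}

# \u = user, \h = host, \w = working directory. The \[ \] pairs mark the
# colour codes as non-printing so bash computes the prompt width correctly.
# Single quotes delay $(git_branch) until each prompt is drawn.
PS1='\[\e[32m\]\u@\h\[\e[0m\] \[\e[34m\]\w\[\e[0m\]$(git_branch)\$ '
```

Fish gives you roughly this with no configuration at all; in bash you first have to learn about prompt escapes, non-printing markers, and command substitution inside PS1.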

Text editors are another great example of the evolution of out-of-the-box defaults. Vim and Neovim both improved on their predecessors, but much of that improvement is locked behind extremely complex configuration experiences and plugins. Here are four different terminal text editors with no configuration applied:

Vi (top left) is our baseline and, as far as I can tell, doesn’t actually support much configuration. What you see out of the box is more or less what’s there.

Vim (top right) greatly improved on Vi, adding things such as syntax highlighting, line numbers, spellchecking, split windows, folding, and even basic autocompletion.

However, everything but syntax highlighting is either extremely clunky or outright disabled without configuration.

For example, some of the first things I did when I made my first .vimrc were to enable indent folding, make some better keybinds for navigating windows, and add a line number ruler to the side.

Neovim (bottom left) further improved on Vim, adding support for Treesitter and the Language Server Protocol, but the out-of-the-box experience is exactly the same as Vim’s! In order to take advantage of the LSP and Treesitter support, you have to install plugins, which means learning an Nvim package manager, learning how to configure LSPs, and either configuring a new LSP for every language you want to use it with or finding out about Mason and being OK with having multiple levels of package management in your Nvim install alone. Don’t get me wrong: Neovim is a great editor once you get over the hump. I still use it as my daily driver, but so much of its functionality is simply hidden.

Then we have the Helix (bottom right) editor. Slightly glaring default colour scheme aside, everything is just there. Helix doesn’t have plugin support yet, but it has so much in core that, looking through my Neovim plugins, pretty much everything they provide is in the core editor! Ironically, the one feature that I feel Helix is missing, folding, is a core part of Neovim, albeit one that requires some configuration to get good use out of. Helix does have a config file where you can change a huge number of settings, but it’s an extremely usable IDE out of the box thanks to having all of its features enabled by default.

Helpful error messages

When the user does do something wrong, it is vital to let them know exactly what, where, and how it went wrong, and, if at all possible, what they can do to fix it.

“Operation Failed”, “Error”, or “syntax error” on their own are horrible error messages. They tell you that something somewhere failed, giving almost no information the user can use to troubleshoot. In the worst case, they can even point you in a completely different direction than what is actually needed to fix things.

Git is a good example of this. As much as I love git, sometimes its error messages are the opposite of helpful.

To borrow an example from Julia Evans, if you run git checkout SomeNonExistentBranch, you get:

```
error: pathspec 'SomeNonExistentBranch' did not match any file(s) known to git
```

This is confusing because you are trying to check out a branch; you aren’t thinking about files.
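As a sketch of the alternative, here is a small bash function that reports the same mistake in terms of branches, suggests a likely intended name, and tells the user how to create the branch if that is what they wanted. `report_missing_branch` is a hypothetical helper, not part of git; the known branches are passed in as arguments to keep it self-contained, and the `${var,,}` lowercase expansion requires bash 4 or later.

```bash
# report_missing_branch NAME KNOWN_BRANCH...
# Hypothetical helper: say what went wrong (no such branch), suggest a
# near-miss if one exists, and say how to fix it. Always returns 1.
report_missing_branch() {
    local wanted=$1 branch
    shift
    echo "error: no branch named '$wanted'" >&2
    for branch in "$@"; do
        # Case-insensitive comparison catches a common class of typo.
        if [[ ${branch,,} == "${wanted,,}" ]]; then
            echo "hint: did you mean '$branch'?" >&2
        fi
    done
    echo "hint: to create it, run: git switch -c $wanted" >&2
    return 1
}
```

In a real repository, the branch list could come from git itself, e.g. `report_missing_branch somebranch $(git branch --format='%(refname:short)')`.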

Another example, which I’ve covered before, is the contrast between Bash and Nushell. Consider the following script:

```
$ for i in $(ls -l | tr -s " " | cut --fields=5 --delimiter=" "); do
    echo "$i / 1000" | bc
  done
```

This gets the sizes of all the files in kilobytes (1 kB = 1000 bytes). But what if we typo the cut field?

```
$ for i in $(ls -l | tr -s " " | cut --fields=6 --delimiter=" "); do
    echo "$i / 1000" | bc
  done
(standard_in) 1: syntax error
(standard_in) 1: syntax error
(standard_in) 1: syntax error
(standard_in) 1: syntax error
(standard_in) 1: syntax error
(standard_in) 1: syntax error
(standard_in) 1: syntax error
(standard_in) 1: syntax error
(standard_in) 1: syntax error
```

Because the syntax error comes from bc rather than from bash directly, even the line number it gives you is misleading, and in order to have the slightest clue what’s going on, you have to start print debugging.
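One way to get a more useful failure out of the bash version is to validate each value before doing arithmetic on it, and to say which input was bad and what was expected. The sketch below is my own illustration: `to_kb` is a hypothetical helper, and it uses bash’s integer arithmetic instead of bc, so the only possible error is the one we report ourselves.

```bash
# to_kb: hypothetical helper. Reads byte counts on stdin, one per line, and
# prints each in kilobytes (integer division by 1000, like bc's default scale 0).
# On a non-numeric line it says which line failed and what it got, then stops.
to_kb() {
    local line_no=0 value
    while IFS= read -r value; do
        line_no=$((line_no + 1))
        if [[ ! $value =~ ^[0-9]+$ ]]; then
            printf 'error: input line %d: expected a byte count, got %q\n' \
                "$line_no" "$value" >&2
            printf 'hint: check which field you passed to cut\n' >&2
            return 1
        fi
        # 10# forces base 10 so values with leading zeros are not read as octal.
        echo $((10#$value / 1000))
    done
}
```

Feeding it the typo’d pipeline, e.g. `ls -l | tail -n +2 | tr -s " " | cut --fields=6 --delimiter=" " | to_kb` (where `tail -n +2` skips the `total` line), would stop at the first file with an error naming the value it found, typically a month abbreviation, instead of a wall of identical bc errors.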

The equivalent in Nushell would be:

```
> ls | get size | each {|item| $item / 1000}
```

and the equivalent error (typing get type instead of get size) would be:

```
> ls | get type | each {|item| $item / 1000}
Error: nu::shell::eval_block_with_input

  × Eval block failed with pipeline input
   ╭─[entry #5:1:1]
 1 │ ls | get type | each {|item| $item / 1000}
   · ─┬
   ·  ╰── source value
   ╰────

Error: nu::shell::type_mismatch

  × Type mismatch during operation.
   ╭─[entry #5:1:30]
 1 │ ls | get type | each {|item| $item / 1000}
   ·                              ──┬── ┬ ──┬─
   ·                                │   │   ╰── int
   ·                                │   ╰── type mismatch for operator
   ·                                ╰── string
   ╰────
```

Though the first error isn’t helpful, the second one tells us right away that $item is not what we expect it to be, hopefully pointing us to the get type mistake.

Nushell’s error messages are miles ahead of other shells’ by just… being useful: helping you find where you made an error, rather than just telling you there’s an error somewhere.

Concise and discoverable documentation

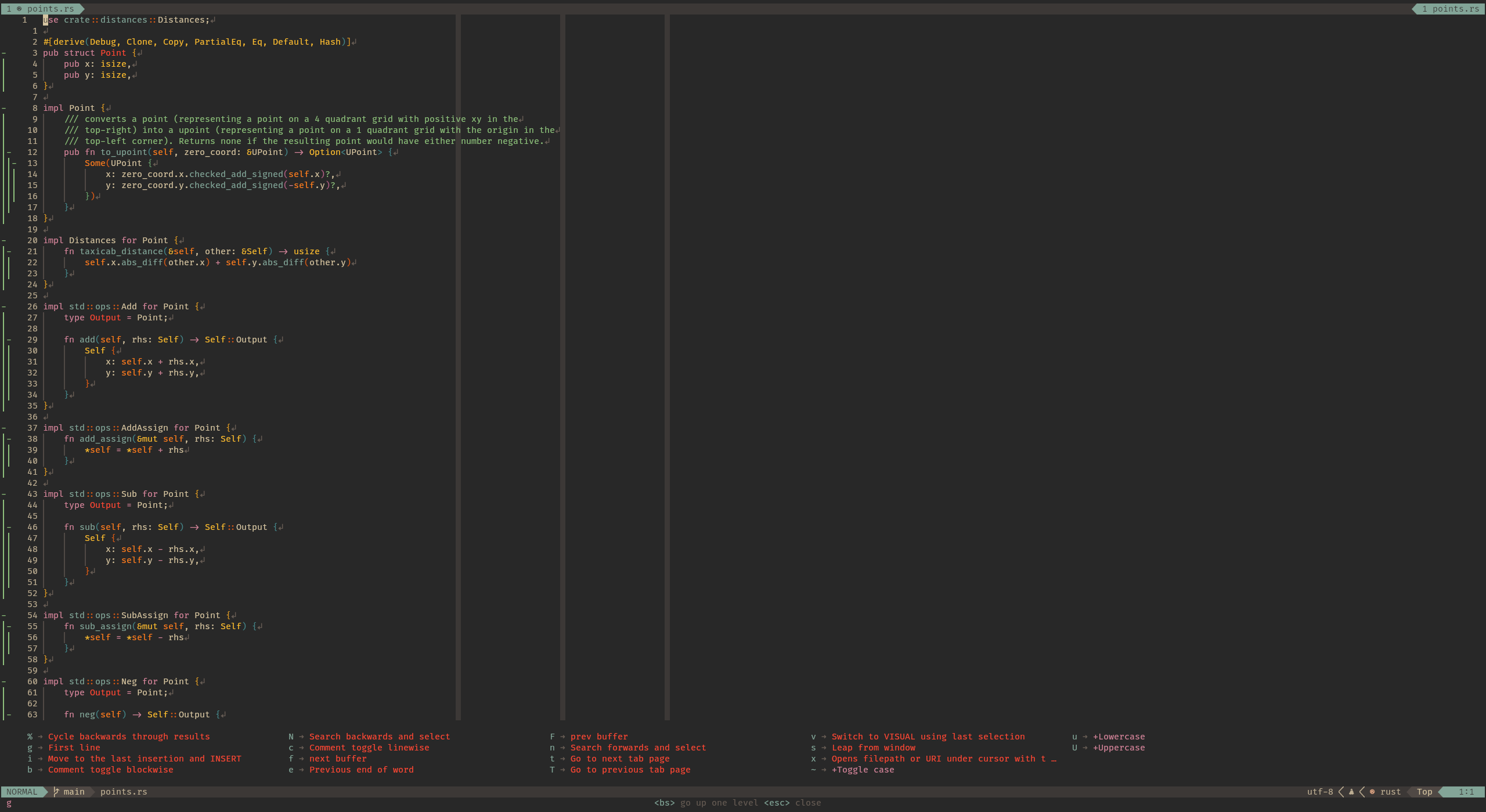

In my Nvim config, I use which-key, a plugin that displays available keybindings as you type. I’ve been using vim for almost a decade, including a long time without which-key, so it’s not like I never learned the keybindings, but I still find which-key useful.

Why is that, you may ask? Well, because even though I use (n)vim every day, I don’t use all the keybindings every day. I might go months between using, for example, dap (delete current paragraph) or C-w x (swap current window for next). Naturally, when you go months without using certain parts of a program, you tend to forget they exist. Which-key solves that handily by offering quick, non-intrusive reminders of what is available.

Here’s what my which-key config looks like:

Now, which-key and its like have been around for a while, but other TUI programs have integrated contextual hints without the need for a plugin. The two that I am aware of are zellij and helix.

Helix has both autocompletion for its built-in command line and a contextual hint that appears when you press the first key in a multi-key combo. This drastically helps both new and experienced users learn and remember keybinds without making the editor any less powerful.

Zellij has a bottom bar displaying the keybindings available in the current mode. This has proven invaluable for me, as I don’t use a terminal multiplexer much (on GUI systems, I use the window manager for managing multiple terminals) and as such tend to forget the keybinds. Though it does take up screen space, and a person who used Zellij every day would most likely disable it, the hints bar is more than worth it for new and occasional users.
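At its core, the hint popup in which-key and Helix is a prefix lookup over the keymap: given the keys typed so far, list every binding that could still complete. Here is a minimal bash sketch of that idea; the keymap contents and the `hints` function name are illustrative assumptions (the first two entries are the Vim bindings mentioned above), not how either tool actually stores its bindings. Associative arrays require bash 4 or later.

```bash
# Illustrative keymap: key sequence -> description.
declare -A keymap=(
    [dap]="delete current paragraph"
    ["C-w x"]="swap current window for next"
    ["C-w v"]="split window vertically"
    [dd]="delete line"
    [dw]="delete word"
)

# hints PREFIX: print every binding whose key sequence starts with PREFIX,
# sorted (byte order) so the list is stable between invocations.
hints() {
    local prefix=$1 key
    for key in "${!keymap[@]}"; do
        if [[ $key == "$prefix"* ]]; then
            printf '%s\t%s\n' "$key" "${keymap[$key]}"
        fi
    done | LC_ALL=C sort
}
```

Running `hints d` lists the three bindings that start with d, which is roughly what a which-key popup offers after that first keypress.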

Common use cases should be easy

Where possible, documentation should not even be required for the most common use cases.

Whenever I want to use find, I almost always have to look at the man page first, as I don’t use it quite often enough to memorize it. But that’s totally unneeded! 90% of my uses of find take the form of find ./ -name "*foo*". With fd, the equivalent search is a simple fd foo: dead simple, no man page needed. Of course, 10% of the time I’m doing something else and have to look at the manual even with fd, but the point is that manuals are for when you want to do something with the tool that is not the most common use case.

There are many other examples as well. How many of your grep invocations are in the form of grep -R 'foo' ./? Most of mine are. Ripgrep shortens that to rg foo while still having all the power of grep when I need it, and it is faster as well!

This isn’t to say that tools should ‘dumb themselves down’ or hobble themselves to make them easier to use. However, they should keep in mind the most common use case their tool is likely to be used for, and streamline it.
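You can claw back some of this ergonomics for the old tools with a couple of shell functions that hard-code the common case. This is only a sketch: `ff` and `gr` are hypothetical names, and they are not equivalent to fd and ripgrep, which also treat the pattern as a regex, skip hidden and `.gitignore`d files by default, and add colour.

```bash
# ff PATTERN [DIR]: list paths under DIR (default: .) whose name contains
# PATTERN, i.e. the common `find ./ -name "*foo*"` case.
ff() {
    find "${2:-.}" -name "*$1*"
}

# gr PATTERN [DIR]: search file contents under DIR (default: .) recursively,
# i.e. the common `grep -R 'foo' ./` case. `--` keeps a PATTERN that starts
# with "-" from being read as a flag.
gr() {
    grep -R -- "$1" "${2:-.}"
}
```

The design choice is the same one fd and ripgrep make: the most common invocation gets the shortest spelling, and everything else still goes through the full tool.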

Shedding historical baggage

Many tools were made for one thing, and over time have evolved into another thing entirely. This can happen by conscious design or, more commonly, from an industry or community picking a tool up and using it for something it was not originally designed for. While hacking tools for uses they were not designed for is always fun and in many cases the only way to do something, it’s perhaps better to make a dedicated tool when the design choices made for the old use cases start hindering the new use cases.

A great example of this is just, a command runner heavily inspired by GNU make.

Make was (and in large part still is) a C build system. As such, it includes features such as implicit rules (if a file called foo.o is needed and there is no explicit rule for it, make will invoke the C compiler on foo.c; there are similar rules for C++ and for linking) and file modification time laziness (fantastic for a build system, but it needs a liberal sprinkling of .PHONY rules when used as a task runner).

These features are good features when make is being used as a build system, but another major use of make that has emerged is as a way to run common tasks. So alongside make build to build your program, you would have make bootstrap, make test, make config, etc.

This is where the design decisions behind make the build system start to hinder make the task runner, forcing one to learn about make the build system in order to work around those features when using make the task runner.
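The timestamp footgun is easy to reproduce. The sketch below assumes GNU make is installed, and the function name is just for illustration: it builds a throwaway task-runner Makefile in a temporary directory, shows a test task being silently skipped once a directory named test exists, and then fixes it with .PHONY.

```bash
# make_phony_demo: illustrative only. The body runs in a subshell ( ... ) so
# the cd does not leak into the caller's shell.
make_phony_demo() (
    cd "$(mktemp -d)" || exit 1

    # A task-runner style Makefile: "test" is meant to be a command, not a file.
    printf 'test:\n\t@echo running tests\n' > Makefile
    make test     # runs the recipe: prints "running tests"

    mkdir test    # e.g. a directory of test fixtures...
    make test     # ...now the target "test" exists with no prerequisites,
                  # so make treats it as up to date and skips the recipe

    # Declaring the target phony tells make it never corresponds to a file.
    printf '.PHONY: test\ntest:\n\t@echo running tests\n' > Makefile
    make test     # runs the recipe again
)
```

Only the first and last make invocations print "running tests"; the middle one reports that test is up to date, which is exactly the build-system behaviour that a task runner does not want.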

However, make can’t drop these features, both because projects still actively use make as a build system, and because even makefiles that are only used as task runners still work around the footguns and would be broken if make made large changes to its syntax and semantics.

By contrast, just was designed from the outset to be a command runner, and as a result, it is much easier to pick up the just language and make a quick set of commands that can be run. By leaving behind the old tool, a new tool can be made that better fits the tasks that people use the tool for.

The languages

Another thing I’ve noticed is that while the language of choice for CLI tools used to be C, more recent tooling has been dominated by Rust and Go.

Of course, there are exceptions to the pattern. TeX was written in Pascal, Neovim kept C as its primary language, and there is the occasional new tool written in C or C++. But there is still a clear pattern in the language choice for newly written tools.

Now, why do you think that pattern has changed? And have these new languages led to an increase in the number of tools being written? I think so, and I don’t actually think it’s the languages themselves so much as the libraries surrounding them.

Both Rust and Go have healthy package ecosystems surrounding interaction with the terminal. Rust has Clap for argument parsing, crossterm for dealing with ANSI escape codes and other terminal interaction, and Ratatui for making TUIs. Go has a similar set of tools, with Cobra for CLI argument parsing, Viper for config file management integrated with Cobra, Gocui, tview, and Termui for TUIs, or Bubbletea for pretty UI components.

These libraries, combined with the extra ergonomics offered by the languages themselves, lower the barrier to entry, allowing more people to experiment with the design and ergonomics of CLI tools.

Conclusion

If I have seen further than others, it is by standing on the shoulders of giants.

– Isaac Newton, 1675

Once again, I’d like to state that I am not advocating for shiny new tools because they are shiny and new. Likewise, I don’t think the old tools are bad, nor does their age alone count against them. However, new tools have the opportunity to learn from their predecessors and build upon them. In this way, the new tools are a tribute to the tools that came before: a recognition of their strengths and an acknowledgement of their weaknesses.

Now, these new tools are not the be-all and end-all of the command line interface. Just because this new generation of tools improves on the old ones does not mean they are themselves perfect. As we use these tools, we will become familiar with them, and we will discover their sharp edges, or their common use case will change, or we will develop a new use case entirely. And when these things happen, we will develop yet another generation of tools, one further polished and adapted to new use cases.

Appendix: the tools

This is an extremely unscientific table of command line tools that I have tried, have used, or currently use. It is assuredly incomplete, but should be broadly representative. The dates come from the first git commit where available and from Wikipedia otherwise, and the table is sorted by year first, then alphabetically.

| tool | year | language |
|---|---|---|
| ls | 1961 | c |
| cat | 1971 | c |
| cd | 1971 | c |
| cp | 1971 | c |
| man | 1971 | c |
| rm | 1971 | c |
| grep | 1973 | c |
| diff | 1974 | c |
| sed | 1974 | c |
| bc | 1975 | c |
| make | 1976 | c |
| vi | 1976 | c |
| TeX | 1978 | pascal |
| bourne shell | 1979 | c |
| awk | 1985 | c |
| screen | 1987 | c |
| bash | 1989 | c |
| zsh | 1990 | c |
| vim | 1991 | c |
| midnight commander | 1994 | c |
| ssh | 1995 | c |
| curl | 1996 | c |
| fish | 2005 | c++ (currently being rewritten in rust) |
| git | 2005 | c |
| fossil | 2006 | c |
| tmux | 2007 | c |
| the silver searcher | 2011 | c |
| go 1.0 | 2012 | go |
| jq | 2012 | c |
| fzf | 2013 | go |
| eza/exa | 2014 | rust |
| neovim | 2015 | c |
| pueue | 2015 | rust |
| rust 1.0 | 2015 | rust |
| just | 2016 | rust |
| micro | 2016 | go |
| nnn | 2016 | c |
| ripgrep | 2016 | rust |
| fd | 2017 | rust |
| bat | 2018 | rust |
| broot | 2018 | rust |
| difftastic | 2018 | rust |
| hyperfine | 2018 | rust |
| lazygit | 2018 | go |
| lsd | 2018 | rust |
| nushell | 2018 | rust |
| scc | 2018 | go |
| sd | 2018 | rust |
| bottom | 2019 | rust |
| git-delta | 2019 | rust |
| grex | 2019 | rust |
| starship | 2019 | rust |
| tre | 2019 | rust |
| typst | 2019 | rust |
| diskonaut | 2020 | rust |
| duf | 2020 | go |
| helix | 2020 | rust |
| pijul | 2020 | rust |
| zellij | 2020 | rust |
| zoxide | 2020 | rust |
| btop | 2021 | c++ |
| ast-grep | 2022 | rust |
| yazi | 2024 | rust |